A new climate science study, involving a panel of 17 experts from 13 countries, has just been published in the scientific journal Atmosphere. The study looked at the various data adjustments that have been routinely applied over the last 10 years to the European temperature records in the widely used Global Historical Climatology Network (GHCN) dataset.

The GHCN monthly temperature dataset is the main data source for thermometer records used by several of the groups calculating global warming – including NOAA, NASA Goddard Institute for Space Studies (GISS) and the Japan Meteorological Agency (JMA).

These thermometer records can vary in length from decades to over a century. Over these long periods, the temperature records from individual weather stations often contain abrupt changes due to local factors that have nothing to do with global or national temperature trends. Examples include changes in the location of the weather station, changes in the types of thermometers used, and the growth of urban heat islands around the station.

To try to correct for these non-climatic biases, NOAA, which maintains the GHCN dataset, has been running a computer program to identify abrupt jumps in the records using statistical methods. Whenever the program identifies an abrupt jump, it applies an adjustment to remove that jump from the station’s record. This process is called temperature homogenization. Until now, most scientists have assumed the process is generally working correctly.
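To make the idea of "identify a jump, then remove it" concrete, here is a minimal sketch in Python. It is not NOAA's program: it looks at a single toy series on its own, and the function names, the `min_segment` parameter and the temperature values are all invented for illustration. It also makes the arbitrary choice of shifting the earlier values to line up with the later ones.

```python
from statistics import mean

def find_largest_step(series, min_segment=3):
    """Return (index, size) of the split with the largest difference
    between the mean after the split and the mean before it.
    Hypothetical helper, not part of any NOAA software."""
    best_index, best_size = None, 0.0
    for i in range(min_segment, len(series) - min_segment + 1):
        size = mean(series[i:]) - mean(series[:i])
        if abs(size) > abs(best_size):
            best_index, best_size = i, size
    return best_index, best_size

def remove_step(series, index, size):
    """Shift every value before the step by its size, so the two
    segments end up with the same mean (an assumption of this sketch)."""
    return [v + size if k < index else v for k, v in enumerate(series)]

# Invented annual anomalies (deg C) with an artificial jump at index 5.
raw = [0.1, -0.1, 0.0, 0.2, -0.2, 1.0, 1.1, 0.9, 1.2, 0.8]
index, size = find_largest_step(raw)
adjusted = remove_step(raw, index, size)
print(index, round(size, 2))
```

A single series cannot tell a station move from a genuine change in local climate, which is why the detected jumps need to be checked against independent evidence.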

In this new study, the authors analyzed thousands of different versions of the dataset downloaded over 10 years. They studied the homogenization adjustments for more than 800 European temperature records. They found that these adjustments changed dramatically every day when NOAA re-ran their computer program.

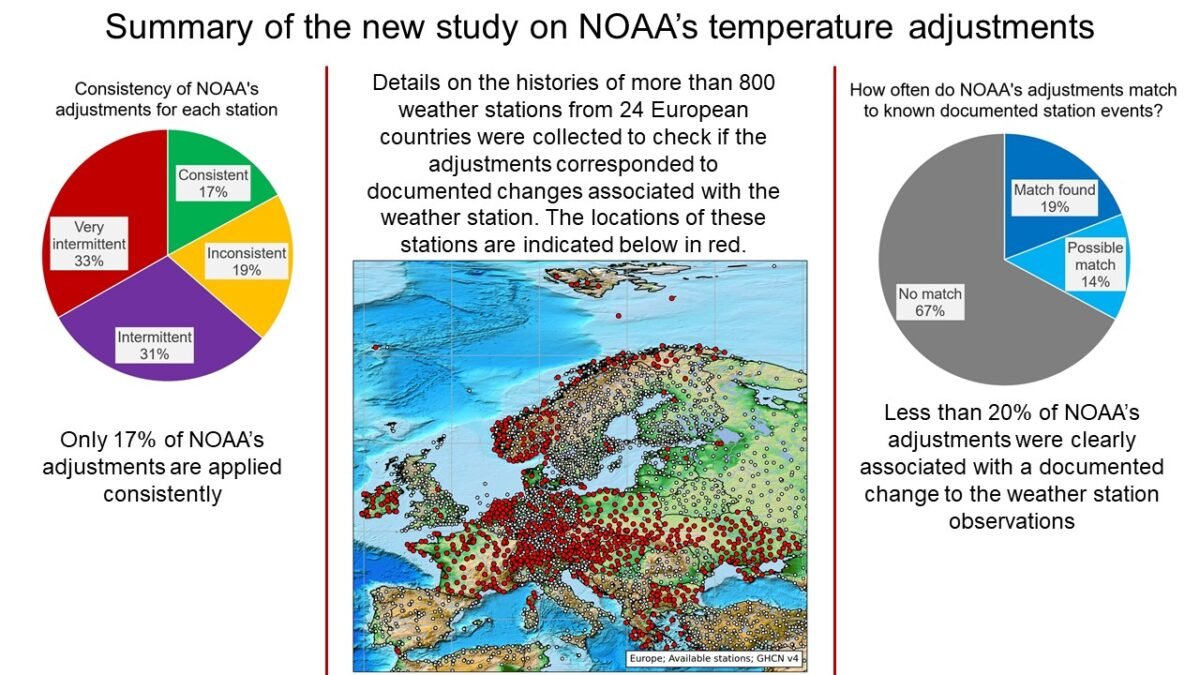

The authors found that only 17% of NOAA’s adjustments were consistent from run to run.
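One simple way to think about run-to-run consistency is to ask in what share of runs a given adjustment shows up at all. The Python sketch below does this for a single station. It is only an illustration: the consistency criterion used in the study is not described here, and the function name and the breakpoint years are invented.

```python
from collections import Counter

def breakpoint_persistence(runs):
    """Map each breakpoint year to the fraction of runs that contain it.
    `runs` is a list of sets of breakpoint years, one set per run.
    Hypothetical measure, assumed for this sketch."""
    counts = Counter(year for run in runs for year in set(run))
    return {year: counts[year] / len(runs) for year in sorted(counts)}

# Invented breakpoint years for one station across four runs.
runs = [{1951, 1978}, {1951, 1990}, {1951}, {1951, 1963}]
persistence = breakpoint_persistence(runs)
for year, share in persistence.items():
    print(year, share)
```

In this toy case only the 1951 breakpoint appears in every run, while the others each appear in just one run out of four.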

Furthermore, by compiling historical records known as station history metadata for each of the stations, they were able to compare the adjustments applied by NOAA’s computer program to the documented changes that were known to have occurred at that weather station. They found that fewer than 20% of the adjustments NOAA had been applying corresponded to any event noted by the station observers, such as a change in instrumentation or a station move.
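A comparison of this kind can be pictured as a matching exercise: for each adjustment, check whether the station history records an event close to the same date. The Python sketch below is a hedged illustration only. The function name, the one-year `tolerance` and all of the years are assumptions made for the example, not values taken from the study.

```python
def fraction_documented(adjustment_years, event_years, tolerance=1):
    """Return the share of adjustments that fall within `tolerance`
    years of at least one documented station event.
    Hypothetical helper with an assumed matching window."""
    if not adjustment_years:
        return 0.0
    matched = sum(
        any(abs(a - e) <= tolerance for e in event_years)
        for a in adjustment_years
    )
    return matched / len(adjustment_years)

# Invented years for illustration only.
adjustments = [1951, 1963, 1978, 1990, 2004]
events = [1950, 1991]  # e.g. a station move and an instrument change
share = fraction_documented(adjustments, events)
print(share)
```

The result depends on how wide the matching window is, so any such figure should be read together with the tolerance that was used.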

The findings of the study show that most of the homogenization adjustments carried out by NOAA have been surprisingly inconsistent.

Moreover, every day, as the latest updates to the thermometer records arrive, the adjustments NOAA applies to the entire dataset are recalculated and changed. As a result, for any given station, e.g., Cheb, Czech Republic, the official homogenized temperatures for 1951 (as an example) might be very different in Tuesday’s dataset from those in Monday’s dataset.

The study itself was not focused on the net effects of these adjustments on long-term climate trends. However, the authors warned that these bizarre inconsistencies in this widely used climate dataset are scientifically troubling. They are also concerned that most researchers using this important dataset have been unaware of these problems until now.

The authors conclude their study by making various recommendations for how these problems might be resolved, and how the temperature homogenization efforts can be improved in the future.

Details on the study

Evaluation of the Homogenization Adjustments Applied to European Temperature Records in the Global Historical Climatology Network Dataset

by Peter O’Neill, Ronan Connolly, Michael Connolly, Willie Soon, Barbara Chimani, Marcel Crok, Rob de Vos, Hermann Harde, Peter Kajaba, Peter Nojarov, Rajmund Przybylak, Dubravka Rasol, Oleg Skrynyk, Olesya Skrynyk, Petr Štěpánek, Agnieszka Wypych and Pavel Zahradníček

Atmosphere 2022, 13(2), 285; https://doi.org/10.3390/atmos13020285